To build a house you have to start with a solid foundation. I hate that saying, but it’s true, and it applies to VDI just the same: to have an efficient VDI environment you MUST have consistent gold images. A gold image is the image I base all of my View Pools on. I have a 3-step process for building my gold images:

- Create OS Template

- Create Gold Image

- Install Core Company/Pool Specific Software

Before I build my pool-specific Gold Images I start with a base Operating System Template in vCenter. This lets me clone Gold Images, Dedicated VMs or Test Boxes that already have the Tools, Agents and other items that must be installed for a View Environment. This is the most important step in the process because it truly is the foundation for all future Gold Images in my environment. Let’s get to work!

Create OS Template

In vSphere I create a new virtual machine called “Win7Gold”. I select the “Custom” configuration and work through the wizard with the following specs:

- Name your VM

- Choose an Inventory Location

- Choose Host/Cluster Location

- Choose Resource Pool (If DRS is enabled)

- Select a Storage Resource (Local or Shared Storage)

- Virtual Machine Version (since I am running ESXi 5.5 I choose the latest)

- Guest OS Version (For this example I am using Windows 7 x64)

- CPU Sockets/Cores – 2vCPU

- Memory – 3GB (View Best Practices say 3GB minimum)

- Network – Choose Network and Adapter – VMXNet3

- SCSI Controller – LSI Logic SAS

- Create a new virtual disk (unless importing from an existing disk)

- HDD – 32GB Thin Provision (This is a template, no need to build out the whole disk)
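
If you maintain more than one template, it can help to write the spec down as data so you can compare it between builds. This is only a minimal Python sketch and not a VMware API: the `VmSpec` class, its field names and `check_spec` are my own illustration, and the values are simply the ones from the list above.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class VmSpec:
    """Hypothetical record of the template hardware chosen above."""
    name: str
    guest_os: str
    vcpus: int
    memory_gb: int
    nic: str
    scsi_controller: str
    disk_gb: int
    thin_provisioned: bool


# Minimum memory quoted in the list above.
MIN_MEMORY_GB = 3


def check_spec(spec: VmSpec) -> list[str]:
    """Return human-readable problems; an empty list means nothing was flagged."""
    problems = []
    if spec.memory_gb < MIN_MEMORY_GB:
        problems.append(
            f"memory {spec.memory_gb}GB is below the {MIN_MEMORY_GB}GB minimum"
        )
    if not spec.thin_provisioned:
        problems.append("template disk is not thin provisioned")
    return problems


WIN7_TEMPLATE = VmSpec(
    name="Win7Gold", guest_os="Windows 7 x64", vcpus=2, memory_gb=3,
    nic="VMXNET3", scsi_controller="LSI Logic SAS", disk_gb=32,
    thin_provisioned=True,
)

if __name__ == "__main__":
    print(check_spec(WIN7_TEMPLATE) or "spec OK")
```

The two checks are just the two points the list calls out (the memory minimum and thin provisioning); add your own as your environment needs them.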

vCenter will now build out the base VM, time to edit some hardware!

- Remove Floppy (You can always add to a specific Gold Image later if needed)

- Select “Options” tab and review the Boot Options

- Select “Force BIOS Setup”

- Power on VM and Open VM Console

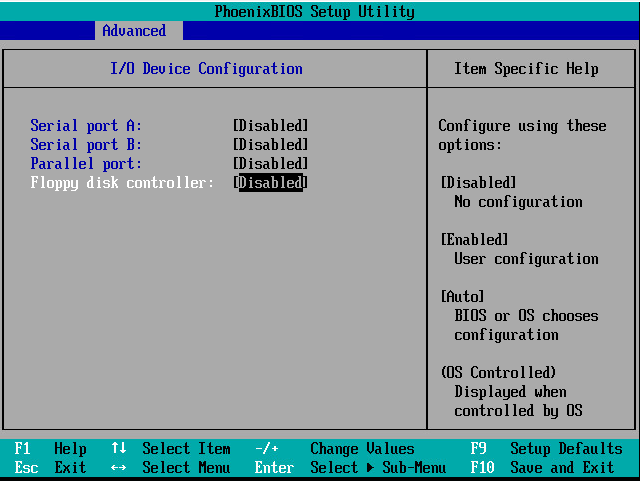

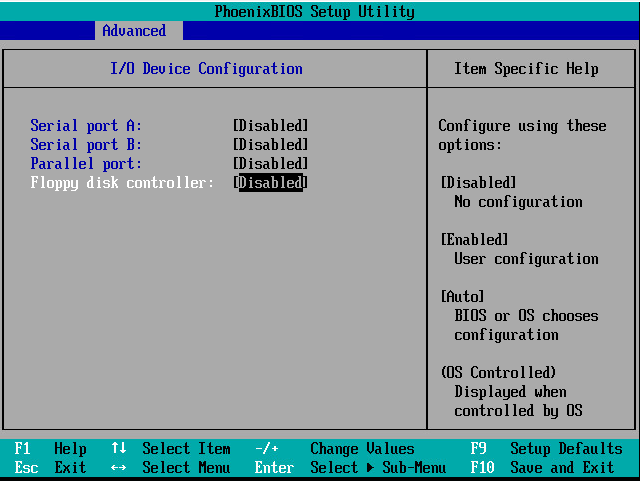

- VM will boot into BIOS, select Advanced Tab

- Disable all of those ports (You can always add to a specific Gold Image later if needed)

- Save and Exit

- Back in vCenter – Edit VM Settings and mount the OS media ISO (make sure to check “Connected” and “Connect at Power On”), then install your Operating System

- Once Windows has finished installing we need to install some VMware packages before anything else because of conflicts with .Net Framework and View Agent

The next step is to optimize Windows for Horizon View. VMware has a great optimization guide that includes a script to run, but I am a little more visual and discovered VMware Flings. Flings has a great application called VMware OS Optimization Tool that can automate a lot of the tweaking for your OS Template, so let’s use it to tweak!

Once the Fling is installed let’s run an analysis and optimize everything except: Windows Firewall (for VMware Blast), Windows Update (latest and greatest updates) and Windows Search. Notice how only 4 are left? Everyone’s tweaks will be different but there are recommended and optional ones you can review!

Once you have optimized with the Fling, uninstall the OS Optimization Tool and let’s get Microsoft Updates installed.

Once Windows gets all of its updates (I don’t install IE10), there is one last update that we need to install, related to the VMXNet3 driver (KB2550978). After this you can go into Services and disable Windows Update. Once that is disabled we can delete the update leftovers from:

C:\Windows\SoftwareDistribution\Download
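
If you would rather script the cleanup than delete the files by hand, here is a minimal Python sketch. It assumes Python is available on the machine, which the steps above do not require, and `clear_directory` is a helper name I made up. It empties the folder but leaves the folder itself in place.

```python
import shutil
from pathlib import Path

# Folder named above; adjust if Windows is installed somewhere else.
DOWNLOAD_CACHE = Path(r"C:\Windows\SoftwareDistribution\Download")


def clear_directory(folder: Path) -> int:
    """Delete everything inside folder, keep the folder itself.

    Returns the number of top-level entries that were removed.
    """
    removed = 0
    for entry in folder.iterdir():
        if entry.is_dir() and not entry.is_symlink():
            shutil.rmtree(entry)  # sub-folder: remove it and its contents
        else:
            entry.unlink()        # plain file or link
        removed += 1
    return removed


if __name__ == "__main__":
    # Run elevated, after the Windows Update service has been disabled.
    if DOWNLOAD_CACHE.is_dir():
        print(f"removed {clear_directory(DOWNLOAD_CACHE)} entries")
    else:
        print(f"{DOWNLOAD_CACHE} not found, nothing to do")
```

The same helper can be reused later, when the Windows Update service is switched back on to patch MS Office and the folder fills up again.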

After Windows Updates are finished there are some Windows Features that we don’t need, so let’s remove them (optional):

- Games

- Windows DVD Maker

- Windows Media Center

- Internet Printing Client

- Windows Fax and Scan

- Tablet PC components

- Windows Gadget Platform

- XPS Services

- XPS Viewer

The last item before Sysprep is to erase all event logs. You can do this by running this command in an elevated command prompt:

for /F "tokens=*" %1 in ('wevtutil.exe el') DO wevtutil.exe cl "%1"
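
The one-liner above lists every event log with `wevtutil el` and then clears each one with `wevtutil cl`. If that is hard to read, the same two steps can be sketched in Python. This is only an illustration and assumes Python is installed on the VM; the function names are my own, and only the two `wevtutil` subcommands come from the command above.

```python
from __future__ import annotations

import shutil
import subprocess


def parse_log_names(el_output: str) -> list[str]:
    """Turn the output of `wevtutil el` (one log name per line) into a list."""
    return [line.strip() for line in el_output.splitlines() if line.strip()]


def build_clear_commands(log_names: list[str]) -> list[list[str]]:
    """Build one `wevtutil cl <log>` argument list per log name."""
    return [["wevtutil.exe", "cl", name] for name in log_names]


def clear_all_event_logs() -> None:
    """List every event log and try to clear each one (needs elevation)."""
    listing = subprocess.run(
        ["wevtutil.exe", "el"], capture_output=True, text=True, check=True
    )
    for command in build_clear_commands(parse_log_names(listing.stdout)):
        # check=False: a log that cannot be cleared does not stop the loop.
        subprocess.run(command, check=False)


if __name__ == "__main__":
    # Only attempt the real thing where wevtutil actually exists.
    if shutil.which("wevtutil.exe"):
        clear_all_event_logs()
```

Passing each command as a list means log names that contain spaces or slashes do not need any extra quoting.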

Let’s get ready to Sysprep our OS Template and convert to a VM Template for our Gold Images.

Run sysprep.exe and choose Generalize, OOBE, Shutdown

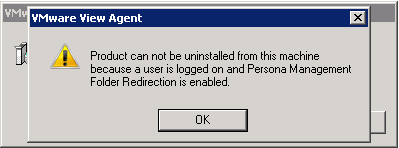

Right Click on VM and Choose Template > Convert to Template. Now that we have converted our OS Template into a VM Template we can proceed to cloning our template to build a Gold Image for View Pools. What’s nice about building a VM Template as opposed to moving to a Gold Image to start with is that now I can deploy a quick Test machine or build out Dedicated VM’s without affecting any other images. Also if you use vCloud Director, it will require having images as Templates for provisioning purposes.

Create Gold Images

Now that we have our OS Template built we can clone it to make a Gold Image. We maintain several Gold Images based on scope of work or department. Eventually we will move away from so many Gold Images and start utilizing Horizon Mirage (v2 now supports VDI). Until then let’s build out a Gold Image for our Accounting Department.

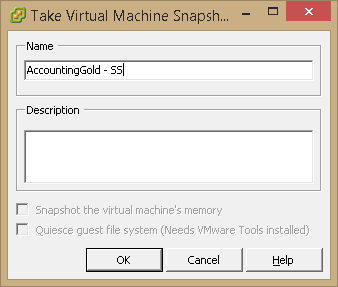

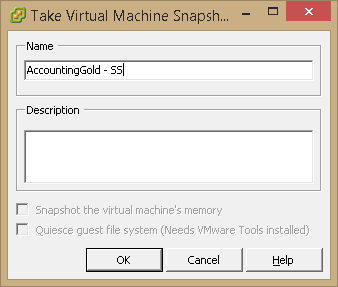

In vCenter let’s clone our OS Template (the Win7Gold VM we converted to a template above) to make AccountingGold.

Each of our View Pools has specific hardware requirements based on the software they use, so let’s make some changes to address some accounting software that we will install. I will enlarge the hard drive to 72GB and increase RAM to 4GB.

Since we ran Sysprep on the OS Template VM, we need to go through the basic Windows setup steps on the new Gold Image. Next, join the machine to the domain and reboot. Some people say to join the domain last, but in my experience most software packages live on a central file server, and being in the domain makes it easier to authenticate to AD and to configure a DSN, SQL or AD-specific application.

I like to tweak the display properties at this point by going to System Properties > Advanced System Settings > Advanced > Performance > Settings > Adjust for Best Performance. You can also set the Windows Theme to Windows Classic.

Install Core Company/Pool Specific Software

From here the sky is the limit on what you want to install for your Gold Images, we typically install MS Office, Flash, Chrome, Java, etc. For all applications we install, we always do a first run to ensure everything installed correctly. If you install MS Office re-enable Windows Update service and update Office (Internet or WSUS since we are in the Domain now). Once complete, disable Windows Update service and delete updates from:

C:\Windows\SoftwareDistribution\Download

So let’s assume you have installed everything that you want and are ready to provision this image for use by a View Pool. There are several things you want to tweak to make the linked-clone process go smoothly.

SDelete is a Sysinternals utility for Windows. Its -z option zeroes the free space in a Windows partition so that the space can be reclaimed from the virtual disk later. Since we are running our VMs thin provisioned, I like to make sure my Gold Images are as compact as possible when the Replicas are produced. You will need to download a copy of SDelete from the Microsoft Sysinternals site. To start, let’s run a check disk for the system partition:

chkdsk /f

Now we can run SDelete from an elevated command prompt against the system drive (C: in this example). NOTE: this will take some time

sdelete -z C:

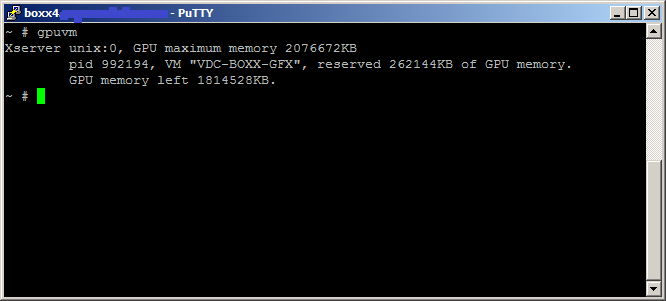

Now let’s enable SSH on the Host that our Gold Image is running on. Shut down your Gold Image and open PuTTY or your preferred SSH client, then browse to the VM’s folder on its datastore and run vmkfstools against the VMDK:

ls

cd vmfs

ls

cd volumes

ls

cd SPECIFIC DATASTORE (Case Sensitive)

ls

cd SPECIFIC VM (Case Sensitive)

ls

vmkfstools -K SPECIFIC VMDK FILE (Case Sensitive)
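
All the `cd` and `ls` steps above do is walk to `/vmfs/volumes/<datastore>/<vm folder>/`. If you already know the names, you can build the full command in one go. Below is a small Python sketch that assembles it on your workstation so you can paste it into the SSH session. The helper names and the example datastore and VM names are made up for illustration; only the `/vmfs/volumes` layout and `vmkfstools -K` come from the steps above.

```python
import shlex
from pathlib import PurePosixPath

# Root of all datastores on the host, as browsed to above.
VOLUMES_ROOT = PurePosixPath("/vmfs/volumes")


def vmdk_path(datastore: str, vm_folder: str, vmdk_file: str) -> PurePosixPath:
    """Full path to a VMDK; every part is case sensitive on the host."""
    return VOLUMES_ROOT / datastore / vm_folder / vmdk_file


def punchzero_command(datastore: str, vm_folder: str, vmdk_file: str) -> str:
    """Return the vmkfstools -K command line, quoted for the host shell."""
    return "vmkfstools -K " + shlex.quote(str(vmdk_path(datastore, vm_folder, vmdk_file)))


if __name__ == "__main__":
    # Hypothetical names; substitute your own datastore, folder and VMDK.
    print(punchzero_command("datastore1", "AccountingGold", "AccountingGold.vmdk"))
```

The quoting matters when a datastore or VM name contains spaces, which is easy to get wrong when typing the path by hand.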

Hole Punching should take a while but will eventually finish.

Once that is complete, start your VM back up and run chkdsk /f one more time. Also be sure to disable SSH on the Host again in vCenter. Finally, clear the event logs one more time.

Now let’s build our shutdown script and we will be done with our Gold Image. We use this script to rearm MS Office KMS activation, clear the configured KMS server name in Windows (slmgr /ckms), release the IP address, flush the DNS cache and shut down. Create a text file on the desktop called “script.bat”, right-click the file and choose Edit, then insert the following text and save:

"C:\Program Files (x86)\Common Files\microsoft shared\OfficeSoftwareProtectionPlatform\OSPPREARM"

slmgr /ckms

ipconfig /release

ipconfig /flushdns

shutdown -s -t 00

Run the script.bat file and your machine is now 100% ready to become a Parent Gold Image for a View Pool. Once it has shut down, you can take a snapshot and start provisioning a View Pool!

Up next in our 6 Part series is Part 2 – PCoIP Best Practices

VDI Performance Series Index

Part 1 – Gold Images

Part 2 – PCoIP Best Practices

Part 3 – Persistent vs Non-Persistent

Part 4 – Storage

Part 5 – End User Experience

Part 6 – Wrap Up and What’s Next
